Kan Yilmaz, Co-Founder at Haystack, on Metrics for Engineering Teams

Published on Feb 10, 2021

12 min read

Vidal: [00:00] Good morning. Today I have with me Kan Yilmaz. Welcome to ManagersClub.

Kan: [00:06] Thank you, Vidal.

About Metrics

Vidal: [00:07] Kan, you are an expert in engineering metrics. I was really impressed by the discussion we had about engineering metrics last week. Could you tell people how you got so interested in engineering metrics?

Kan: [00:20] It wasn’t specifically about engineering metrics. What made me interested in this topic was that we are engineers, and we are proud to say that we are logical. We acknowledge data, and we make better decisions based on data. When we look at the application an engineering organization builds, yes, we have lots of data, and we make really good decisions about the application.

But when we look at the engineering process itself, how we actually build things, we don’t have the data, and we don’t use any. Everything is mostly based on gut feeling, and that made me uncomfortable. I wanted to figure out why engineering, among all the different teams in an organization, such as sales or marketing, did not use any data, whereas every single other one did. So I dug into that problem, and that led me to metrics.

Vidal: [01:19] How long have you been working in this field of engineering data and metrics?

Kan: [01:24] I have been working on this full-time for more than a year and a half, talking to people to understand why they use metrics: what the reason is, and how metrics make our lives better.

The Goal of Metrics

Vidal: [01:39] What is the goal of metrics? Why should we care about these?

Kan: [01:43] Why do we track anything? Why do we have metrics? The basic reason is to align people around a specific goal. For companies, this is revenue. We have a single goal: to make sure that the company is profitable. That is our metric to track.

And this applies everywhere. Let’s say you want to lose weight: you have a goal, and the metric represents it, which is how many kilos you weigh at the moment. For engineering, this was missing. So I was really interested in what the engineering organization should track.

Vidal: [02:21] You’ve also spoken with hundreds of engineering managers. And that’s how we connected. What are some common mistakes or misunderstandings that managers have on this topic?

Common Mistakes

Kan: [02:32] There are a few mistakes that managers make. The first one is the obvious one, which is Big Brother: you’re tracking all of my actions. From an engineer’s perspective, being tracked doesn’t feel good. And there’s a reason for this: people have tracked engineers with the wrong metrics. There’s Eliyahu Goldratt, who wrote the book The Goal, and in it he specifically pointed out that if you track anybody with metrics they cannot control, they won’t care.

And that is what happens with engineering metrics. Managers try to track engineers with metrics they don’t control. That is the biggest mistake that I have seen. The second biggest mistake is tracking metrics that don’t contribute to the organization’s success.

An example of this is the number of commits. It has no correlation whatsoever with the company’s success, yet a lot of companies track the number of commits, and others track lines of code. These are numbers. You can track them, but they don’t mean anything, and they won’t make your company successful. The engineers themselves know this. They know that these numbers don’t represent the company’s success, and they feel like “I’m just being tracked by an arbitrary number.” It all comes back to measuring something that doesn’t matter.

So you need to make sure of two things. First, the team can control those metrics. Second, those metrics actually align with the company’s goals.

Vidal: [04:13] I think that’s a great point. If you can’t really control the metric, what are you going to do? Just pray that it changes?

Two Best Practices

Vidal: We talked about some of the mistakes people make. What do you understand to be some of the best practices? What should people do?

Kan: [04:26] For engineering teams, I’ll talk about product engineering by default, because that is where we have figured out what metrics to track. I don’t know about R&D or other engineering departments, so the best practices I’ll describe are for product engineering.

The first best practice is to track at the team level. If you track at the individual level, what usually happens is that you don’t get the signal of the process. You get the signal of the person, and it’s a shaky number. A person might be heads-down on code today, but tomorrow they might do design work, and the next day they might have lots of meetings.

If you try to track that with a single number, you’ll get lots of spiky graphs, and that won’t give you actionable results. But if you track at the team level, you will actually get a really good understanding of what the process is. And if you make any change to your process, you can see that change in the team’s habits and in the team’s metrics. So that is one best practice: don’t track individuals, track teams.
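To make the team-versus-individual point concrete, here is a minimal Python sketch. It assumes hypothetical merged pull request records of the form (author, team, merge date); the names `merged_prs` and `weekly_counts` are illustrative and not part of any tool mentioned in this conversation. Two engineers alternate between coding weeks and non-coding weeks, so each person’s weekly count swings between 3 and 0, while the team’s weekly count stays flat at 3.

```python
from collections import Counter
from datetime import date

# Hypothetical merged pull requests: (author, team, merge date).
# Ana ships in ISO week 5 while Ben is in design/meetings; in week 6 they swap.
merged_prs = [
    ("ana", "team-a", date(2021, 2, 1)),
    ("ana", "team-a", date(2021, 2, 2)),
    ("ana", "team-a", date(2021, 2, 3)),
    ("ben", "team-a", date(2021, 2, 9)),
    ("ben", "team-a", date(2021, 2, 10)),
    ("ben", "team-a", date(2021, 2, 11)),
]

def weekly_counts(prs, group_index):
    """Count merged PRs per (group, ISO week number).

    group_index=0 groups by author (individual view);
    group_index=1 groups by team (team view).
    """
    counts = Counter()
    for pr in prs:
        iso_week = pr[2].isocalendar()[1]  # week number within the ISO year
        counts[(pr[group_index], iso_week)] += 1
    return counts

by_person = weekly_counts(merged_prs, 0)  # spiky: 3 then 0, or 0 then 3
by_team = weekly_counts(merged_prs, 1)    # steady: 3 and 3
```

Because `Counter` returns 0 for missing keys, a week with no merges for a person reads as 0 rather than raising an error, which is exactly the dip that makes individual graphs look spiky.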

The second best practice, which ties back to the mistakes, is to make sure that the team can control the metric itself. Most of the teams that I have seen try to apply product metrics to engineering organizations.

Let me describe it this way: monthly active users. I have seen quite a few engineering teams being judged on monthly active users. There are other metrics, such as JIRA tasks completed, or, more commonly, story points completed. Story points completed, again, looks fine. You think that it represents the delivery of that specific team.

The problem with story points is that they’re inaccurate, because engineers do not fill them in at the correct time. This produces such a chaotic environment that you don’t get actionable insights from tracking story points.

There is a workaround; it’s not that you cannot do it. You need to make sure that story points are accurate and that people are not gaming them. Those are fairly hard problems to solve. I have seen people solve them, but I would generally recommend not tracking product-related metrics for engineering.

There are much, much better metrics to track. We can go over that if you want.

Metrics for Product Engineering Teams

Vidal: [07:00] I’d like to. I think that’s a great point you make about confusing the product metrics. What are some of the appropriate metrics to track for product engineering teams?

Kan: [07:10] Okay. Let’s go back to the fundamentals, to where our conversation started. I said the metrics need to represent the organization’s success, which means decreased churn and increased revenue. What metrics represent this?

In product engineering, imagine a car. This is the product itself, the organization itself. The driver is the product manager. They can make decisions on where to go on the road. But they don’t decide on the speed. The speed is decided by engineering. The engineering organization is the engine of this car. And how do we make sure that the car goes fast?

The answer is the number of successful iterations. That is the core metric that product engineering teams should track. What does the number of successful iterations mean? The number of iterations is fairly obvious: if I do a deployment, then I did an iteration. I gave value to customers.

But if you only track that metric, here is what happens, and we have seen this in multiple companies. The engineering manager says, “Hey, let’s iterate faster. How can we iterate faster?” And the team responds, “Let’s not write tests. We spend 20% of our time writing tests…” That doesn’t work out. Then you have a faulty product.

That is not a successful iteration; the customer didn’t get value from that deployment. You need to make sure that each iteration is both fast and successful. So what are the metrics to track this? I would put them into five different metrics. But let me take one step back and split them into two categories.

The first is product engineering, and the second is the DevOps team. I’ll focus on product engineering first, which has four metrics, divided into speed and quality, just like I said: the number of successful iterations. The speed metrics are deployment frequency and cycle time. Deployment frequency is how often you deploy, which is quite obvious by itself.
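Deployment frequency can be expressed as an average number of production deployments per week. The sketch below is a minimal illustration, assuming you already have a list of deployment timestamps from your own pipeline; the function name `deployments_per_week` and the half-open window [start, end) are illustrative choices, not taken from Haystack or any particular tool.

```python
from datetime import datetime, timedelta

def deployments_per_week(deploy_times, start, end):
    """Average production deployments per week in the half-open window [start, end)."""
    if end <= start:
        raise ValueError("end must be after start")
    # Count only deployments that fall inside the window.
    deploys = sum(1 for t in deploy_times if start <= t < end)
    # Dividing one timedelta by another yields a float number of weeks.
    weeks = (end - start) / timedelta(weeks=1)
    return deploys / weeks

# Usage: six deployments in a two-week window averages 3.0 per week;
# a deployment exactly at `end` belongs to the next window and is excluded.
start = datetime(2021, 2, 1)
end = start + timedelta(weeks=2)
times = [start + timedelta(days=d) for d in range(6)] + [end]
rate = deployments_per_week(times, start, end)
```

Using a half-open window means consecutive reporting periods never double-count a deployment that lands on a boundary.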

Then we go to cycle time. Cycle time can be tracked in two different ways. Some people track it from JIRA, for a single task: how long did it take for a single JIRA task to complete? The second way is to track how long a single pull request took to complete, and by complete I mean from start to deployment.

With pull requests, you can measure this from first commit to production. In JIRA, it can run from triaged to production as well. One nuance there is that the JIRA measurement might not be accurate, because for engineers it’s an extra process; tracking it is not part of their habits.

Making sure the JIRA task is tagged correctly takes extra effort, but GitHub pull requests are already in their habits and in their workflow. The pull request data comes out right because engineers don’t have to tag anything manually; they just work normally and you can get the data from it. So I would recommend focusing on pull requests, but JIRA is also a way to track cycle time.
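Pull request cycle time, as described here, runs from a PR’s first commit to its production deployment. Below is a minimal sketch, assuming you have already pulled those two timestamps per PR from your own source control and deploy logs; the function name and the choice to summarize with the median are illustrative assumptions, not something stated in the interview.

```python
from datetime import datetime, timedelta
from statistics import median

def median_cycle_time_hours(prs):
    """Median first-commit-to-production time, in hours.

    prs: iterable of (first_commit_at, deployed_at) datetime pairs.
    PRs not yet deployed (deployed_at is None) are skipped.
    Returns None when no PR has been deployed.
    """
    hours = [
        (deployed_at - first_commit_at).total_seconds() / 3600
        for first_commit_at, deployed_at in prs
        if deployed_at is not None
    ]
    return median(hours) if hours else None

# Usage: three deployed PRs (10h, 30h, 50h) and one still open.
base = datetime(2021, 2, 1, 9, 0)
prs = [
    (base, base + timedelta(hours=10)),
    (base, base + timedelta(hours=30)),
    (base, base + timedelta(hours=50)),
    (base, None),
]
typical = median_cycle_time_hours(prs)
```

The median is used here because a handful of long-lived pull requests can drag a mean far away from what a typical change experiences; a team could just as reasonably report the mean alongside it.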

So what I have talked about so far is speed: deployment frequency and cycle time. Now we can go to the quality metrics, which also divide into two categories. The counterpart of deployment frequency is hotfixes. The metric is called change failure rate, and it is the number of hotfixes divided by the number of deployments, expressed as a percentage.

So imagine you had five hotfixes and a hundred deployments. That’s five divided by a hundred, a 5% change failure rate, which is a great number. There is a really good analysis of this in a book called Accelerate. The authors did research on thousands of organizations and found that a change failure rate of 0 to 15% is a good target.

So for speed we have deployment frequency, and its quality counterpart is change failure rate: hotfixes over deployments. Then we have cycle time. What’s the quality counterpart of cycle time? It is the number of bugs. Most people track the number of bugs; it’s quite common in most organizations.
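The change failure rate arithmetic above (5 hotfixes over 100 deployments gives 5%) fits in a couple of lines. This is a minimal sketch; the function names are illustrative, and the 0 to 15% band is the range quoted from Accelerate in this conversation, hard-coded here as an assumption rather than read from any published dataset.

```python
def change_failure_rate(hotfixes, deployments):
    """Hotfixes divided by deployments, expressed as a percentage."""
    if deployments == 0:
        raise ValueError("no deployments in the period")
    return 100.0 * hotfixes / deployments

def within_quoted_range(rate_percent, low=0.0, high=15.0):
    """True if the rate falls in the 0-15% band mentioned in the interview."""
    return low <= rate_percent <= high

# Usage: the example from the interview.
rate = change_failure_rate(5, 100)   # 5.0
good = within_quoted_range(rate)     # True
```

Raising on zero deployments avoids reporting a misleading 0% for a period in which nothing shipped at all.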

The mistake that I have seen most in tracking the number of bugs is that teams only track the total. That’s it. Then what you get is: okay, it was 200, now it’s 250. So what? You can’t make any actionable decisions based on the total alone. There are two points you need to make sure you handle in that regard.

One dimension that needs to be tracked is the severity, or priority, of the bug: P1, P2, P3. Most companies have this; some track it, some don’t, but it’s a really good indicator of the quality of your application. The second dimension that I would recommend tracking is team. Almost no company tracks this, and the ones that do are usually the best companies.

And the result will look like this. You have 20 bugs. Of these, 12 belong to team A and eight belong to team B. Then you check and see that team A actually has zero priority-one bugs, but team B has six. You can conclude that team B needs more resources for handling quality or technical debt.

Team A is actually in a much better position, even though it has more bugs than team B. You get this actionable insight on quality if you track bugs along both dimensions: priority and team.
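The team-and-priority breakdown can be reproduced with a simple grouped count. This is a minimal sketch using a hypothetical bug list shaped like the example: 20 open bugs, 12 on team A with no P1s and 8 on team B with six P1s. How team A’s 12 bugs split between P2 and P3, and team B’s remaining two, is invented for illustration, as are the function names.

```python
from collections import Counter

# Hypothetical open bugs as (team, priority) pairs, 20 in total.
open_bugs = (
    [("team-a", "P2")] * 5 + [("team-a", "P3")] * 7    # 12 bugs, no P1
    + [("team-b", "P1")] * 6 + [("team-b", "P3")] * 2  # 8 bugs, six P1
)

def bugs_by_team(bugs):
    """Total open bugs per team (the view that makes team A look worse)."""
    return Counter(team for team, _priority in bugs)

def bugs_by_team_and_priority(bugs):
    """Open bugs per (team, priority) pair (the actionable view)."""
    return Counter(bugs)

totals = bugs_by_team(open_bugs)                    # team-a: 12, team-b: 8
breakdown = bugs_by_team_and_priority(open_bugs)
p1_team_a = breakdown[("team-a", "P1")]             # 0
p1_team_b = breakdown[("team-b", "P1")]             # 6
```

Looking only at `totals` would point attention at team A; the per-priority breakdown shows that team B is the one carrying the urgent bugs.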

Vidal: [13:04] Wow, this is awesome. What you’re sharing is really interesting. I love the point you made about successful iterations, because just iterating by itself may not be valuable. And I totally agree with you about tracking GitHub rather than JIRA, because developers are notorious for not updating JIRA, so you’re going to get some lag in that signal.

Metrics for DevOps Teams

Vidal: You also mentioned DevOps. Can you speak briefly about DevOps metrics?

Kan: [13:30] Sure. DevOps is slightly different from product engineering. DevOps teams don’t directly impact the value delivered to customers; their customers are engineers themselves. Going back to the fundamental premise of tracking metrics: you need to give value to your customers. For product engineering, that is the number of successful iterations, but for DevOps it’s slightly different, because your customers are the engineers. Your goal is to make them more productive. How can you make them more productive? You can build infrastructure that helps engineering teams; they’re your customers. The most common DevOps metric is, again, deployment frequency.

If deployments are slow or infrequent because of gaps in the infrastructure, that is the DevOps team’s responsibility, not the product team’s. So I would say implementing CI/CD and trunk-based development practices, plus a bunch more best practices that DevOps teams can adopt, which improve the infrastructure of the whole product engineering organization.

Then product teams can deliver value to their customers faster. That is the speed side. Now we can go to the quality side. Once the product engineering team does a deployment, they have the stress: okay, if it went wrong, what do we do? We need to do a revert. But how long does a revert take? That is what gives us the stress.

So the harder it is to revert a deployment, the harder it will be for product engineers to deploy. They’ll test it three, four, five times. They’ll stress over it. That is not a good experience for product engineering, and the number to decrease is what we call mean time to recovery.

How long does it take after a bad deployment to recover back to a normal state? For great teams, this is seconds. In some teams, it might take half an hour or an hour if they’re deploying manually. So that infrastructure falls to the DevOps team, and recovery time is their metric. If they focus on speed and quality, meaning deployment frequency and how fast a deployment can be reverted, then those are two really good metrics for DevOps teams to track.
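Mean time to recovery, as defined here, averages how long it takes to get from a bad deployment back to a normal state. Below is a minimal sketch, assuming you record a (failed_at, recovered_at) timestamp pair for each incident; the function name and that record shape are illustrative assumptions.

```python
from datetime import datetime, timedelta

def mean_time_to_recovery(incidents):
    """Mean time from a failed deployment to recovery, as a timedelta.

    incidents: list of (failed_at, recovered_at) datetime pairs.
    Returns None when there were no incidents in the period.
    """
    if not incidents:
        return None
    # sum() needs a timedelta start value, since timedeltas can't be added to 0.
    total = sum((recovered - failed for failed, recovered in incidents), timedelta())
    return total / len(incidents)

# Usage: one automated revert (30 s) and one slower revert (90 s) average to 60 s.
t = datetime(2021, 2, 1, 12, 0)
incidents = [
    (t, t + timedelta(seconds=30)),
    (t, t + timedelta(seconds=90)),
]
mttr = mean_time_to_recovery(incidents)
```

Returning a `timedelta` rather than a raw number keeps the units explicit, which matters when one team’s figure is measured in seconds and another’s in hours.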

Further Resources on Metrics

Vidal: [16:00] Kan, this is just amazing what you’ve shared. I really appreciate you coming on to share this with the readers and listeners of ManagersClub. I think they’ll get a lot of value out of what you’ve shared. If people want to go deeper, learn more about this topic, or connect with you, where should they go?

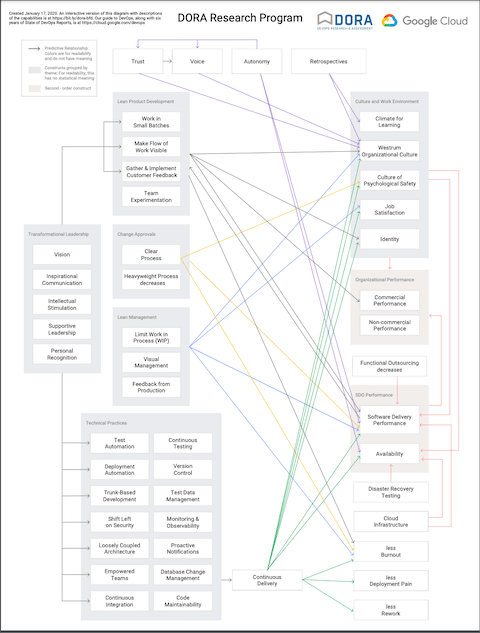

Kan: [16:17] Sure. Regarding this topic, there are really good resources. There is one research program from Google called DORA, “D O R A.” DORA Research: https://www.devops-research.com/research.html. You can check it out. They surveyed organizations ranging from large enterprises to small businesses on these metrics, and they have really good numbers on what we should aim for.

You can check out DORA; they release a report every year.

Second, I would recommend the book Accelerate. The Accelerate authors are actually responsible for the DORA report as well. I think they were the first to discover the metrics I mentioned. I would definitely recommend that all directors read Accelerate.

For tracking these metrics, we’re building a tool called Haystack. You can reach me through usehaystack.io or at kan@usehaystack.io. I’ll be happy to help you with how to track metrics, figure out what practices work for your team, and align your team on specific goals.

So yeah, thank you for having me here, Vidal.

Vidal: [17:32] That book, co-authored by Jez Humble, is a really good book. I met him once when he came to speak at an event, and it’s a great book to recommend.

Thank you so much for coming on, Kan. I really think what you shared is very interesting, and you’ve inspired me to do a better job at looking at metrics myself, so thanks again.

Like this episode?

Subscribe and leave us a rating & review on your favorite podcast app, or reach out with any feedback.

Join our LinkedIn group, where you can discuss and connect with other engineering leaders.